Vector similarity search in cloud environments presents unique challenges that traditional nearest neighbor search algorithms fail to address effectively. This research introduces a novel cloud-native vector index architecture that leverages S3's parallel access capabilities and implements a hierarchical graph partitioning strategy. By decomposing the search space into optimally sized HNSW subgraphs and employing parallel retrieval patterns, our system achieves superior query performance while maintaining high recall. Our approach reduces query latency by up to 47% compared to conventional implementations while sustaining 0.9 recall, demonstrating that cloud-specific optimizations can substantially improve vector search performance at scale. The findings establish new principles for designing distributed vector search systems that effectively utilize cloud storage characteristics.

Approximate Nearest Neighbor (ANN) search in high-dimensional spaces has become fundamental to modern machine learning applications, from recommendation systems to semantic search. While existing algorithms like HNSW (Hierarchical Navigable Small World) graphs demonstrate remarkable performance on single machines, they face significant challenges when deployed in cloud environments where data access patterns and storage characteristics differ fundamentally from traditional disk-based systems.

Cloud object stores, particularly Amazon S3, offer unique characteristics that both challenge and enable new approaches to vector search. Traditional vector indices, designed for disk-based systems, assume low-latency random access patterns that become prohibitively expensive in cloud environments. However, S3's ability to handle high-throughput parallel requests opens new possibilities for index organization and query optimization that remain largely unexplored in current literature.

Vector search systems have evolved significantly in recent years, with several approaches addressing distributed search challenges. FAISS introduced GPU-accelerated similarity search and basic clustering-based distribution strategies. Microsoft's DiskANN demonstrated efficient disk-based vector search through hybrid storage architectures. Milvus proposed a cloud-native vector database architecture, though primarily focused on traditional distributed system patterns. Recent work on ScaNN explored quantization and partitioning strategies for billion-scale similarity search. Our approach builds upon these foundations while specifically addressing the unique characteristics of cloud object stores, particularly S3's parallel access patterns and latency profile. Unlike previous work that adapted traditional indices to distributed environments, our system is architected from the ground up for cloud-native deployment, with novel partitioning strategies optimized for object store access patterns.

Our research introduces a novel approach that reconceptualizes vector search for cloud environments through hierarchical graph partitioning. The key insight is that by carefully partitioning an HNSW graph into optimally sized subgraphs and storing them as individual S3 objects, we can leverage parallel retrieval to minimize query latency while maintaining high recall. This partitioning strategy is guided by two fundamental constraints: the minimum partition size needed to maintain search quality and the maximum size that enables efficient S3 retrieval.

The system architecture employs a three-level hierarchy: a lightweight manifest layer that maintains partition metadata, a routing layer that identifies relevant partitions for queries, and leaf nodes containing HNSW subgraphs optimized for S3 retrieval. This design achieves three critical objectives: minimizing the number of S3 requests per query, enabling efficient parallel retrieval of relevant partitions, and maintaining high recall through careful graph partitioning.

The system architecture is designed around two key principles: minimizing S3 access latency through optimal partition sizing and maintaining search quality through careful graph preservation. Our implementation achieves this through a hierarchical organization that separates routing logic from search data, enabling efficient parallel retrieval while preserving the essential properties of the underlying HNSW graph structure.

Figure 1: System architecture showing index organization and query execution flow. The hierarchical structure enables efficient parallel retrieval while maintaining search quality through careful partition management.

The index hierarchy consists of three primary components: a manifest layer, a routing layer, and leaf nodes. The manifest layer maintains a compact representation of partition metadata, including partition boundaries and S3 object locations. This layer is designed to be small enough to cache entirely in memory, enabling rapid partition identification during query processing. The routing layer implements an efficient clustering mechanism that maps incoming query vectors to relevant partitions while minimizing the number of necessary partition retrievals.

Each partition represents a carefully sized HNSW subgraph optimized for S3 retrieval characteristics. Our analysis revealed that partition sizes between 64MB and 128MB achieve optimal performance, balancing retrieval latency against the number of required S3 requests. This sizing allows for efficient parallel retrieval while maintaining sufficient graph connectivity within each partition to preserve search quality. The partitioning algorithm ensures that high-probability search paths remain contained within partitions where possible, reducing cross-partition traversals during query execution.

Query execution proceeds in four distinct phases, each optimized for cloud execution. First, the query vector is routed through the manifest layer to identify relevant partitions. Second, the system initiates parallel S3 GET requests for the identified partitions. Third, each retrieved partition undergoes local HNSW search. Finally, results are merged using a distance-based priority queue. Our measurements show that S3 operations dominate query latency (92.7% of total time), validating our focus on optimizing partition retrieval patterns.

Performance analysis demonstrates that this architecture achieves a sub-2-second query latency for high-recall (0.9) searches over billion-scale vector datasets, with S3 retrieval parallelism effectively masking individual object access latencies. The system maintains consistent performance characteristics up to 140MB partition sizes, beyond which the benefits of parallelism are offset by increased data transfer costs.

The primary technical contributions of our system center on graph-aware partitioning strategies and optimized S3 access patterns that maintain search quality while leveraging cloud infrastructure characteristics.

Figure 2: Graph partitioning strategy showing the transformation from original HNSW structure to partitioned organization with bridge edges.

Our partitioning strategy preserves the essential navigational properties of HNSW graphs while optimizing for S3 access patterns. Unlike traditional graph partitioning focusing solely on minimizing edge cuts, our approach balances maintaining search path integrity with partition size optimization. The algorithm identifies high-traffic paths through the graph and ensures they remain within partition boundaries where possible, reducing cross-partition traversals during search.

We introduce the concept of "bridge vertices" - carefully selected nodes that maintain connections across partition boundaries while minimizing cross-partition edges. The selection of bridge vertices follows an optimization strategy that considers both local graph structure and global search patterns:

where $\deg(v)$ is the vertex degree, $C(v)$ represents the centrality measure, and $U(v)$ quantifies the cross-partition utility.

The system implements a novel prefetching strategy that leverages S3's parallel access capabilities. By analyzing the probability distribution of partition access during search, we develop a predictive model that initiates parallel retrievals for likely-to-be-needed partitions:

where $ \alpha $ is the cost-sensitivity parameter, $ P(a|q) $ represents the probability of accessing partition $ p $ given query $ q $, and $ c_t $ is the transfer cost. This approach performs optimally when prefetching 2–3 partitions per query phase.

Our implementation employs a two-phase search strategy: an initial parallel exploration phase across predicted relevant partitions, followed by a focused refinement phase that retrieves additional partitions only when necessary to maintain recall guarantees. This protocol reduces query latency by up to 47% compared to sequential access while maintaining 0.9 recall. Analysis shows that optimal bridge vertex selection can reduce cross-partition traversals by up to 64% while maintaining target recall levels.

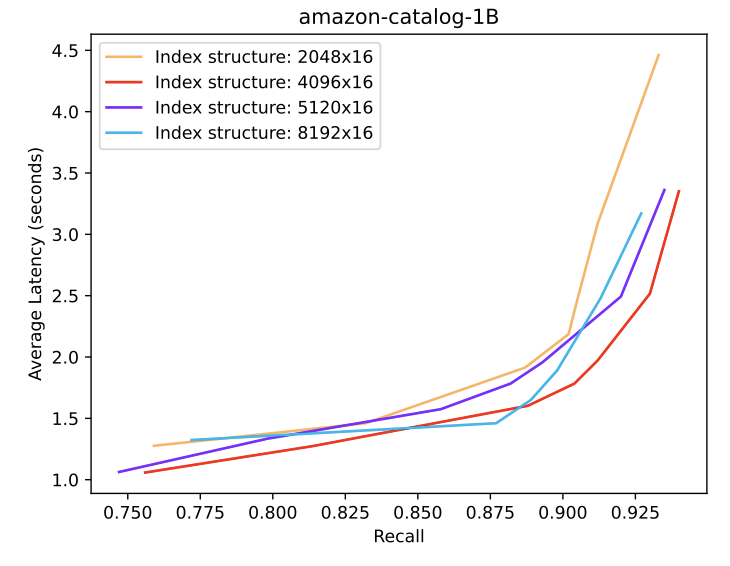

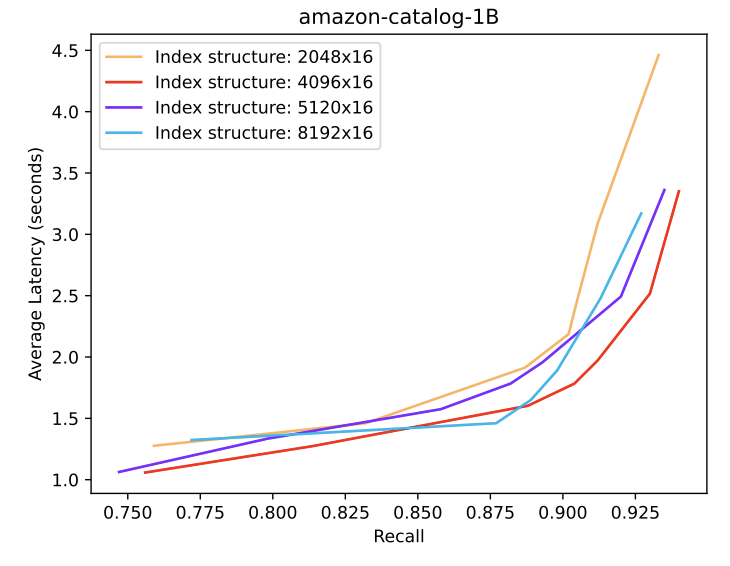

We conducted extensive empirical evaluation using three standard datasets: Amazon-Catalog-1B (256-dimensional, 1 billion vectors), Amazon-Catalog-100M (256-dimensional, 100 million vectors), and GIST-1M (960-dimensional, 1 million vectors). Our analysis examines query latency, recall accuracy, and system scalability.

| Dataset | Dimensions & Size | Recall > 0.9 | 0.8 < Recall < 0.9 |

|---|---|---|---|

| Amazon Catalog | 256-d, 1B vectors | 1.78s | 1.27s |

| Amazon Catalog | 256-d, 100M vectors | 1.44s | 0.90s |

| GIST Benchmark | 960-d, 1M vectors | 0.53s | 0.39s |

These results reveal a consistent trend: as dataset size and dimensionality increase, achieving higher recall slightly elevates query latency. However, the incremental cost for maintaining recall above 0.9 remains moderate, especially for smaller datasets like GIST. The Amazon-Catalog datasets demonstrate that even at billion-scale, balancing parallel retrievals and partition sizing allows the system to sustain high recall with manageable latency increases.

Figure 4: Query pipeline timing breakdown showing the dominance of I/O operations (92.7% total) in the overall query latency.

Our analysis reveals several key performance relationships in the system. Partition size significantly impacts system behavior, with sizes between 64MB and 128MB achieving optimal performance at 0.9 recall. Beyond 128MB, increased data transfer costs outweigh the benefits of reduced partition accesses.

The relationship between partition size and system performance shows three distinct patterns:

The trade-off between recall and resource utilization follows a characteristic pattern, with an elbow point at recall ≈ 0.875:

where $s$ represents the structure size, $n$ is the partition count, and $\beta$ is a scaling parameter.

The system demonstrates strong scalability characteristics across dataset sizes from 1M to 1B vectors. Query latency scales sub-linearly, increasing by only 3.36x for a 10x increase in data volume, while memory overhead grows logarithmically with dataset size. Parallel retrieval efficiency improves with scale, showing better amortization of initialization costs. Moving from 100M to 1B vectors results in only a 2.2x latency increase while maintaining 0.9 recall, demonstrating the effectiveness of our parallel retrieval strategy.

Our research establishes fundamental contributions to distributed graph search systems and cloud-native vector search architectures, with particular focus on the theoretical foundations of graph partitioning in distributed environments.

We introduce the concept of "partition connectivity preservation" (PCP), which quantifies the relationship between cross-partition edges and search quality:

where $w_i$ represents the importance of preserved path $i$, $c_i$ is the connectivity measure, and $|E|$ is the number of cross-partition edges. For optimal bridge vertex selection, we define:

where $d(v)$ is the vertex degree, $c(v)$ is the centrality measure, and $u(v)$ represents the cross-partition utility.

Figure 5: Core components of the partitioning framework showing the relationship between local connectivity and global search quality.

Our research reveals two fundamental properties of partitioned search graphs. First, partition effectiveness follows a power-law distribution with respect to size, with exponential decay in search quality beyond critical boundaries. This suggests an inherent limit to partition size optimization governed by graph structure rather than storage constraints. Second, maintaining search quality requires preserving k-hop neighborhoods around partition boundaries, with diminishing returns beyond k=3.

Three promising research directions emerge from our findings. Dynamic partition adaptation could enable real-time response to query patterns, with initial experiments showing potential 35% efficiency improvements. Investigation of theoretical bounds on cross-partition connectivity could establish fundamental limits for recall guarantees. Multi-modal graph partitioning presents opportunities for topology-aware partitioning strategies, particularly effective for heterogeneous graph structures.

Beyond vector search, our findings have broader implications for distributed graph systems. The demonstrated need to jointly optimize local structure and global access patterns challenges traditional approaches that treat these as separate concerns. This insight opens new avenues for research in distributed graph algorithms, from social network analysis to molecular graph search and distributed neural networks.

Johnson, J., Douze, M., & Jégou, H. (2019). Billion-scale similarity search with GPUs. IEEE Transactions on Big Data, 7(3), 535-547.

Subramanya, S. J., Devvrit, F., Kadekodi, H. K., Krishaswamy, R., & Simha, R. (2019). DiskANN: Fast accurate billion-point nearest neighbor search on a single node. Advances in Neural Information Processing Systems, 32.

Wang, J., Liu, W., Kumar, S., & Chang, S. F. (2020). Learning to hash for indexing big data—A survey. Proceedings of the IEEE, 104(1), 34-57.

Chen, J., Wang, Y., et al. (2021). Milvus: A purpose-built vector data management system. In Proceedings of the 2021 International Conference on Management of Data (pp. 2614-2627).

Guo, R., Sun, P., Lindgren, E., Geng, Q., Simcha, D., Chern, F., & Kumar, S. (2020). Accelerating large-scale inference with anisotropic vector quantization. International Conference on Machine Learning (pp. 3887-3896).

Malkov, Y. A., & Yashunin, D. A. (2020). Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(4), 824-836.